Page 1 of 2

Re: What is the best routine for graphics compression?

Posted: Wed Sep 25, 2019 12:56 pm

by Joefish

Another problem with compression - it's fine if you've got a ROM cartridge or disk to store your compressed data, but if you have to hold it in RAM and hold the expanded version in RAM then you have to decide if it's worth it.

For screens, you can uncompress them straight to the screen memory, so it's not a huge issue. But for game sprites, data, etc. you really need to be achieving 50% compression or it's just not worth it.

For example, if you have two levels in a game and each is 2K of data, that's 4K in total. If you compress it, you're still going to need 2K of unpacked live level data for your game. Now if you can pack your level data by 50% such that each level is now only 1K, then your 2K of live upacked data plus 2 x 1K of compressed data takes up the same space as before, 4K, so you've just broken even.

Now four levels would have taken up 8K, but now take up only 2K + 4 x 1K = 6K, so now you're saving memory. But if your compressed level data isn't shrinking by much, then it's not worth it.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

I've been doing some experiments with Einar's code as I've now got it into my head to compress the title screen of Go-Go BunnyGun and use it as an attract mode even in the 48K game, and even though I'm already running short of memory!

Using the linear screen re-arrangement helps the compression, but an algorithm I wrote myself also helps. That works from the bottom of the screen up, XOR-ing each line with the one above. So where the line is the same as above, you just get empty pixels. It doesn't help on highly detailed screens, or ones with lots of stippling, but for a manga-style image like mine with vertical lines and big blocks of colour, it leaves large blank areas and shaves a few hundred bytes off the compressed screen. Which is now down to under 4K.

What I want to do is try some experiments to see if I can optimise the image itself for compression; maybe reducing the detail a bit as well, and see how significant that is in the compressed file size.

I'm also trying to work out if it's worth compressing the sprites individually or as a block. An 8-frame sprite animation is 720 bytes, and some have only minor changes between frames, so 50% compression is readily achievable. That improves a little if I concatenate several sprites in one block, but makes it more awkward to unpack and extract the ones I want for a level.

Of course, sprites common to all levels (explosions, weapon pod) shouldn't be compressed at all as that would just be a waste of memory.

Re: What is the best routine for graphics compression?

Posted: Fri Sep 27, 2019 11:58 am

by Joefish

Hmm - I seem to have written a compression routine that actually runs on the Speccy itself and compresses my title screen even further, down to 3742 bytes. (The re-rodered ZX7 encoding gave 4247 bytes). Not sure how well it'll fare with other screens, but for the sort of thing people had drawn for the recent Hobbit update - lots of lines and large patches of colour - I suspect it could be pretty good. Though I need to verify it is doing everything reliably and I haven't made a big mistake somewhere. And that's still a big chunk of memory that wouldn't be available to the game!

I need to optimise the cack out of the post-processor stage and probably the decoder too. It's not massive, but I'm no expert and writing super-efficient code.

I want to see if I can do something more efficient for my sprite data too, though it (like my screen code) would take advantage of a bit of knowledge of the sprite format. I've got an idea for improving the encoding of back-referencing small sections of repeated runs in small blocks of data. The question then is whether using two or more custom encoding systems saves enough memory to have more than one decoder programmed in...

Re: What is the best routine for graphics compression?

Posted: Fri Sep 27, 2019 2:19 pm

by Ast A. Moore

I use RLE for the title and the award ribbon in Yankee. I simply count empty (zero) bytes in vertical rows and write down the number of consecutive empty bytes. The decompressor is very fast and it saves quite a few bytes in images that contain a lot of blank bytes. I do decompress them directly onto the screen, so it’s worth it.

You could, I suppose, apply compression to some level data. If you then always decompress it to the same pre-allocated portion of RAM, it seems like a viable option. Maybe you could also pre-populate that area with some graphics, if you’re going to regularly copy them onto the screen. Then again, sprite graphics usually don’t compress well.

Re: What is the best routine for graphics compression?

Posted: Fri Sep 27, 2019 2:49 pm

by Joefish

Well, I refined my earlier idea of XORing rows and added a function to calculate the row addresses in pixel order. So each pixel row is XORed with the one above. And similarly, the attributes sweep backwards one byte at a time, XORing with the previous byte in memory. The result of this is that repeated data gets turned into lots and lots of zeroes. Large blocks of pixels become just a line across the top and a few dots down the sides. Stipples become alternate solid and clear lines. I then use a 0 bit in the compressed data stream to encode a zero byte, so the more of those can be generated the better. Everything else is encoded with a 1 followed by further data, that is either literal bytes, or a limited dictionary of the most common values. I tried a full dictionary but it wasn't worth it - it took too many bits to identify an entry compared to just supplying a literal byte value. That might be a useful approach for text compression too, rather than encoding a full dictionary. And has the advantage that you could decode any part of the data at any time, since there are no back-references.

My earlier one for title graphics - you might like this - takes advantage of the fact that no-one ever uses the FLASH bit. For each character, the attribute comes first. If there's no FLASH bit then the next byte is an 8-bit mask. This tells you for which bytes in the 8 rows of the character there is data to come. Set bits in the mask use the next byte in the data stream for the pixel byte. Any blank bits in the mask result in a 0 byte being written. So a full character takes 10 bytes. But a half-character only 6. The trick is that if the FLASH bit is set, it's first stripped from the attribute, but then the pixel mask byte is re-used from the previous character. So it might encode the same pattern of bytes present in the character, or if the mask is 0, then another empty character is drawn. It simply eliminates all the blank bytes in an image. And it does it using whole bytes, rather than faffing around with an arbitrary stream of bits. I think I also had a set of bytes for each line where each bit meant 'copy the previous attribute and process accordingly'.

Re: What is the best routine for graphics compression?

Posted: Sat Sep 28, 2019 2:24 pm

by Morkin

Joefish wrote: ↑Fri Sep 27, 2019 2:49 pmMy earlier one for title graphics - you might like this - takes advantage of the fact that no-one ever uses the FLASH bit. For each character, the attribute comes first. If there's no FLASH bit then the next byte is an 8-bit mask. This tells you for which bytes in the 8 rows of the character there is data to come. Set bits in the mask use the next byte in the data stream for the pixel byte. Any blank bits in the mask result in a 0 byte being written. So a full character takes 10 bytes. But a half-character only 6. The trick is that if the FLASH bit is set, it's first stripped from the attribute, but then the pixel mask byte is re-used from the previous character. So it might encode the same pattern of bytes present in the character, or if the mask is 0, then another empty character is drawn. It simply eliminates all the blank bytes in an image. And it does it using whole bytes, rather than faffing around with an arbitrary stream of bits. I think I also had a set of bytes for each line where each bit meant 'copy the previous attribute and process accordingly'.

That's quite good.

I guess depending on what you're printing, e.g. if it's static graphics, you could also use the BRIGHT bit for something as well? Not sure what.

Re: What is the best routine for graphics compression?

Posted: Sat Sep 28, 2019 10:38 pm

by TomD

I created a compression routine for exactly this purpose, being able to compress "cutouts" of the screen for titles or small blocks of graphics for intros but can be used for anything. You can read more about it here

http://www.tomdalby.com/other/lzf.html

It has two modes for the "cutouts", static and moveable. If you need to move the "cutout" each time you paste to screen then moveable should be used and the only real difference is the decompressor is larger at 151bytes vs. the static version at 92bytes. Full screen is also in the utility.

Any questions let me know.

TomD

Re: What is the best routine for graphics compression?

Posted: Sat Sep 28, 2019 10:41 pm

by TomD

If you want to see an example of it working try this

https://tomdalby.com/speccy/cobra_w.tap

Meanwhile on my computer :)

Posted: Sun Sep 29, 2019 4:57 pm

by catmeows

Re: What is the best routine for graphics compression?

Posted: Wed Oct 02, 2019 4:58 am

by Bedazzle

What about megalz packer?

Re: What is the best routine for graphics compression?

Posted: Wed Oct 02, 2019 7:15 am

by catmeows

Bedazzle wrote: ↑Wed Oct 02, 2019 4:58 am

What about megalz packer?

https://github.com/emmanuel-marty/lzsa/ ... /README.md

Here is quite handy chart showing compression/speed ratio.

Re: What is the best routine for graphics compression?

Posted: Wed Oct 02, 2019 12:20 pm

by Joefish

Generic byte packers aren't always the best at compressing graphics though. If you can take advantage of some knowledge of how graphics data is more likely to repeat, then you can optimise the compressor to pack that data. Einar's re-arranges a staggered Spectrum screen to line-by-line order to bring potential repetitions closer together, which makes them easier for an LZ77-type algorithm to compress. My alogrithm pre-processes the graphics to turn common repetitions into zeroes, which my packing routine can optimise above all other values, a bit like a RLE algorithm would do.

Re: What is the best routine for graphics compression?

Posted: Wed Oct 16, 2019 8:28 pm

by Joefish

Hmm. Just been meddling with a screen compressor that gives some really good results on the selection of screens I was using. It gets Einar's

Cobra pic down to just 1945 bytes. But try it on that pack of Russian screens and it's not so good. It 'compresses' MAC's

Phantis screen 'down' to only 7123 bytes!

(that's more than it was originally, in case you didn't notice)

(that's more than it was originally, in case you didn't notice)

But on a couple of examples like that

BatmanTheCapedCrusader I get 4957 bytes compared to 5457 (I think) for RCS + ZX7

It works best on patches of colour and lines - multiple levels of stipple and colour changes just make it worse. It might be good for something like that

Hobbit update.

I should probably tweak the algorithm so it just stops trying when faced with too much changing data...

Re: What is the best routine for graphics compression?

Posted: Thu Oct 17, 2019 10:00 am

by catmeows

How it works ?

Honestly - a decent benchmark suite of screens would really help. And I don't mean just full size screens but also in-game illustrations, for example from Case of missing swan and even smaler examples, something of size 6*6 chars like pictures from Rychle Sipy.

Re: What is the best routine for graphics compression?

Posted: Thu Oct 17, 2019 12:24 pm

by Joefish

Well, with smaller graphics there's the question of whether you're expecting to compress the data that makes up a small graphic, and what order the bytes for that are in, or whether you mean to paste the graphic onto an otherwise empty screen and compress the whole screen. As they're different tasks. A whole screen has a fixed format that you can exploit. Maybe known sized graphics have formats that are exploitable too. But arbitrary data of variable length is something different.

My 'MiniDict' technique is to XOR each attribute with the one before, which turns repetitions into zeros.

I then XOR each pixel line with the one above, which again reduces blocks of pixels to just lines and dots around the edges. (What it's not good at is stipples).

Then I do a frequency analysis of what's left, and pick out the 16 most commonly used bytes (other than 0). Again, this takes advantage of the dots that are left by the XOR process.

The encoding is a bitstream as follows:

16 x bytes - common byte mini-dictionary

0 bit -> 0 byte

1,0 bit -> read next four bits, look up byte in mini-dicitonary

1,1 bit -> read next 8 bits as literal byte

I've just added a refinement that after six 0 bits, it then either flags a 1 to continue otherwise it contains a binary number of how many more zero bytes to skip. This helps it skip over large blank areas of the screen. I can post some code later. But it actually runs on a Speccy. Or at least, in an emulator.

Re: What is the best routine for graphics compression?

Posted: Thu Dec 19, 2019 1:07 am

by Lethargeek

Einar Saukas wrote: ↑Mon Sep 16, 2019 5:06 pm

To compress full screen .scr images, I'm sure you will get the best results using

RCS+

ZX7 combined together.

Nope.

Let me remind you of this 3 years old wos thread:

https://www.worldofspectrum.org/forums/ ... compressor

ZX7 (RCS or not) like other Spectrum screen compression tools i'm aware of all have one fundamental flaw: they compress bytes (ie strips) instead of square chunks. In most cases squares have better correlation between the pixels, hence better possible compression ratio.

Also chunky methods are better for compressing small size pictures (ie sprites) - even separate ones. Me planned to write a sprite compressor as well using similar approach but got distracted by other projects and everyday problems.

Re: What is the best routine for graphics compression?

Posted: Thu Dec 19, 2019 3:57 pm

by Joefish

No, because RCS actually turns the screen into 32x one-byte-wide vertical columns for each 2K chunk of screen, as well as re-ordering each column to be in continuous visual order. It's an extremely efficient transformation before doing any LZ type compression, as it still allows horizontal back-references within a 2K rolling window, but makes the more common vertical back-references very small (apart from the jump every screen third). I thought it was line-by-line, but a quick glance at his source code shows it uses columns.

The main thing going for MiniDict3 though is the compression algorithm runs within a few seconds on the Spectrum itself. It's not the most tightly packed data, but simple chunky images do very well with it. And I'm also not insisting on daft licensing controls.

Re: What is the best routine for graphics compression?

Posted: Thu Dec 19, 2019 4:38 pm

by Lethargeek

Joefish wrote: ↑Thu Dec 19, 2019 3:57 pm

No, because RCS actually turns the screen into 32x one-byte-wide vertical columns for each 2K chunk of screen, as well as re-ordering each column to be in continuous visual order.

in no way this disproves what i'm saying

Joefish wrote: ↑Thu Dec 19, 2019 3:57 pm

It's an extremely efficient transformation before doing any LZ type compression,

...and i'm saying that "LZ type" (or any byte-based) compression is inherently inefficient for zx graphics, regardless of transformations

the compression results speak for themselves, why don't you check yourself?

my zx-pk links from the old thread are dead now but the tool is also available on vtrd:

https://vtrd.in/pcutilz/!LGKV1.1.zip

there's also the attribute optimizer as well:

https://vtrd.in/pcutilz/OPALV1_0.zip

Re: What is the best routine for graphics compression?

Posted: Mon Dec 23, 2019 12:53 pm

by catmeows

Prase, could you share details how it works ?

Re: What is the best routine for graphics compression?

Posted: Mon Dec 23, 2019 3:29 pm

by Lethargeek

catmeows wrote: ↑Mon Dec 23, 2019 12:53 pm

could you share details how it works ?

copypasta from the old wos thread:

No screen reordering, goes through standard Spectrum 8x8 tiles one by one rather than bytes and vertical byte stripes. But first it applies one of several useful xor-filters (not like joefish's predictor from the RCS thread, but simpler one-directional 1-2 pixels shift then xor or with any already processed tile) separately for each tile. You can see the result in the file LgK-xored.bmp after running LgK-pack. These filters not just increase the number of zero bits for normal pics, but also make the remaining 1s distributed in such a fashion that 2x2 square pixel chunks can be packed well with certain prefix code (the same for almost any sensible image, with the result very close to optimal Huffman code packing in most cases, difference usually less than size of the Huffman tree). Again, you can see the statistics for different types of 2x2 chunks running LgK-pack. Filter choice for the each tile is Huffman-packed however, as this data is unpredictable for each screen. No RLE is used for tile data (right now, but maybe never).

Attributes are compressed differently, basically there's a choice of repeating one of the two adjacent former attrs (and in rare cases yet another method is used with two more choices skipping the adjacent ones to next colour) or just load the new attr value from the bitstream (or small list of most frequent values). Repeating the former action (not the former attr!) is coded with one bit, changing action costs 2-3 bits depending on the method. Then, longer sequences of repeats could be reduced with RLE (as there are many complex pics light on attributes, so here it's useful).

Attribute data can be placed (and depacked) either after the each tile or after the whole picture separately. Give "negative" address (same number, just add a minus) to LgK-sfx to have attributes intermixed with tile data (as in the demo). The generated decompressor size will be 2-3 bytes longer in this case.

Basically, xoring maximizes the number of 2x2 pixels chunks (and indirectly the number of bigger square chunks) with none or just one ink pixel inside. Then all the 2x2 chunks are compressed using the same variable-size code for any screen:

Code: Select all

inks code

none 0

1 10xx

2 110xx

2 1110x

4 11110

3 11111xx

On top of that, empty 4x4 and 8x8 chunks might be coded with an extra leading zero bit (this is decided for the whole screen).

Re: What is the best routine for graphics compression?

Posted: Tue Dec 24, 2019 10:26 am

by catmeows

I have tried Phantis (MAC):

zx7(ordered by columns) -> 6546

exomizer(ordered by columns) -> 6305

lgk -> 5713

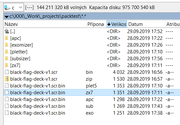

and black-flag-deck:

zx7(ordered by columns) -> 1351

exomizer(ordered by columns) -> 1251

lgk -> 1280

Re: What is the best routine for graphics compression?

Posted: Tue Dec 24, 2019 3:14 pm

by Lethargeek

catmeows wrote: ↑Tue Dec 24, 2019 10:26 am

black-flag-deck:

I couldn't find this by name, but i suspect it's half empty or overly regular. As i planned to make a compressor for an arbitrary rectangular area and the decompressor was big enough already, i never optimized for "lighter" screens (and RLE is only for attributes). On a big selection of screens LgK is usually much better (hundreds of bytes) for about 90% and very close (maybe a bit worse) for the rest.

Theres are also a few pathological cases (see R-Tape's avatar here

or a low-res imitation like Alien Syndrome)) with much worse ratios compared to "byte compressors", the result of very limited number of unique bytes for most of the screen area and less (if any) correlation between the adjacent pixels.

Also pics with less regular dithering (like Floyd-Steinberg) may go either way, but usually the chunky method is good enough. .

Re: What is the best routine for graphics compression?

Posted: Mon Jan 06, 2020 5:15 pm

by catmeows

Lethargeek wrote: ↑Tue Dec 24, 2019 3:14 pm

catmeows wrote: ↑Tue Dec 24, 2019 10:26 am

black-flag-deck:

I couldn't find this by name, but i suspect it's half empty or overly regular.

Indeed, it is.

As i planned to make a compressor for an arbitrary rectangular area

Ah, it is pitty you didn't. The benchmarks for full screen Are intetesting but I think for in-game graphics, smaller pictures are more relevant. And LZ variants have really hard times with small screens, especially when they have fixed offset sizes.

Also pics with less regular dithering (like Floyd-Steinberg) may go either way, but usually the chunky method is good enough. .

I understand but random dither are hard to pin in general, so I don't sebe it as a problem.

To be honest I'm quite surprised how well LGK works.

One thing I would really like to try one day is proper context -> prediction -> range coder packer.

I mean using pixels on west, north-west,north to form a 3-bit context and kind of range coder. It would be inevitably slow (I did some estimations and for 8-bit probabilities multiplying 16-bit limits I got liitle less than 1000 t-states per pixel -> about 400 ~ 500 bytes / sec) but it would really catch the cases where you have small illustration + text on screen.

Re: What is the best routine for graphics compression?

Posted: Mon Jan 06, 2020 7:51 pm

by Lethargeek

catmeows wrote: ↑Mon Jan 06, 2020 5:15 pmAh, it is pitty you didn't. The benchmarks for full screen Are intetesting but I think for in-game graphics, smaller pictures are more relevant. And LZ variants have really hard times with small screens, especially when they have fixed offset sizes.

maybe i will address this in future versions, but right now i have too little free time when my brains are in working condition

catmeows wrote: ↑Mon Jan 06, 2020 5:15 pmI understand but random dither are hard to pin in general, so I don't sebe it as a problem.

fun fact: once i found one particularly bad dithered specimen and later it turned out the source was a greyscale picture with 8x1 wide pixels

and another: serious pc arithmetic coding software failed at the EFMB title screen (an example of "abnormal correlation") even worse

catmeows wrote: ↑Mon Jan 06, 2020 5:15 pmTo be honest I'm quite surprised how well LGK works.

and it still suboptimal very much!

Re: What is the best routine for graphics compression?

Posted: Tue Jan 07, 2020 6:03 pm

by Lethargeek

catmeows wrote: ↑Mon Jan 06, 2020 5:15 pmOne thing I would really like to try one day is proper context -> prediction -> range coder packer.

I mean using pixels on west, north-west,north to form a 3-bit context and kind of range coder. It would be inevitably slow (I did some estimations and for 8-bit probabilities multiplying 16-bit limits I got liitle less than 1000 t-states per pixel -> about 400 ~ 500 bytes / sec) but it would really catch the cases where you have small illustration + text on screen.

do you have any estimates about the possible zx decompressor size?

Re: What is the best routine for graphics compression?

Posted: Tue Jan 07, 2020 9:38 pm

by catmeows

Lethargeek wrote: ↑Tue Jan 07, 2020 6:03 pm

catmeows wrote: ↑Mon Jan 06, 2020 5:15 pmOne thing I would really like to try one day is proper context -> prediction -> range coder packer.

I mean using pixels on west, north-west,north to form a 3-bit context and kind of range coder. It would be inevitably slow (I did some estimations and for 8-bit probabilities multiplying 16-bit limits I got liitle less than 1000 t-states per pixel -> about 400 ~ 500 bytes / sec) but it would really catch the cases where you have small illustration + text on screen.

do you have any estimates about the possible zx decompressor size?

Not much, I guess. 200 - 250 bytes perhaps.