- Use a complex tool like Exomizer, which compresses your data as much as possible, but then you need a slower, larger routine to decompress it.

- Use a simpler tool like ZX7, which gives you a small routine that decompresses your data much faster, but then your compressed data won't be as small.

Yes, it is! It's called ZX0.

The full source code and documentation are here:

https://github.com/einar-saukas/ZX0

The only noticeable difference from ZX7 is that the cross-platform ZX0 compressor can take several seconds to compress data. For this reason, the ZX0 compressor provides an extra option "-q" (quick mode) for faster, non-optimal compression. When you need to repeatedly modify, compress and retest a certain file, you can use "-q" to speed up the cycle, then remove it later to get the best compression. Apart from this, everything works exactly the same in ZX0 and ZX7. In particular, the standard ZX0 decompressor is only 82 bytes, and it decompresses data at about the same speed as ZX7.
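If you drive your build from a script, the quick/optimal switch can be a single flag. This is only a rough sketch: it assumes the compressor executable is called `zx0` and is on your PATH, and it passes `-f` to overwrite the previous output, which is an assumption not covered in this post (check the usage text of your ZX0 build). The helper names are made up for illustration.

```python
import subprocess

def build_zx0_command(input_path, quick=False, exe="zx0"):
    """Return the argument list for one compressor run."""
    cmd = [exe, "-f"]      # assumption: -f overwrites an existing output file
    if quick:
        cmd.append("-q")   # quick mode: faster, non-optimal compression
    cmd.append(input_path)
    return cmd

def compress(input_path, quick=False):
    """Run the compressor; raises CalledProcessError if it fails."""
    subprocess.run(build_zx0_command(input_path, quick), check=True)
```

While iterating you would call `compress("screen.scr", quick=True)` (the file name is just a placeholder), and for the final build call it again with `quick=False`.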

TEST RESULTS

To ensure that my test results are not biased, I will be posting here a comparison between different compressors using someone else's data. This way, I can be sure I'm not giving preference (even involuntarily) to the kind of data that would conveniently work better for ZX0.

I just found quite a few interesting articles on the web that compare Z80 data compressor results. However, it seems the dataset used in these comparisons is only available for download in one of them:

https://hype.retroscene.org/blog/dev/740.html

This is an excellent article written by introspec. It's in Russian, but Google Translate does a good job here, so I recommend reading it. I hope he won't mind that I will use his dataset to complement his comparison!

This is the original table presented in this article:

| Test | unpacked | ApLib | Exomizer | Hrum | Hrust1 | Hrust2 | LZ4 | MegaLZ | Pletter5 | PuCrunch | ZX7 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Calgary | 273526 | 98192 | 96248 | 111380 | 103148 | 102742 | 120843 | 109519 | 106650 | 99041 | 117658 |
| Canterbury | 81941 | 26609 | 26968 | 31767 | 28441 | 28791 | 34976 | 31338 | 30247 | 27792 | 32268 |
| Graphics | 289927 | 169879 | 164868 | 173026 | 169221 | 171249 | 195544 | 172089 | 171807 | 169767 | 172140 |
| Music | 151657 | 59819 | 59857 | 62977 | 60902 | 62678 | 77617 | 62568 | 63661 | 63977 | 66692 |
| Misc | 436944 | 252334 | 248220 | 262508 | 251890 | 255363 | 293542 | 261396 | 263432 | 256278 | 265121 |
| TOTAL: | 1233995 | 606833 | 596161 | 641658 | 613602 | 620823 | 722522 | 636910 | 635797 | 616855 | 653879 |

Results on the same dataset for ZX7, ZX0 in quick mode ("-q"), Exomizer3 and ZX0:

| Test | ZX7 | ZX0-q | Exomizer3 | ZX0 |
|---|---|---|---|---|
| Calgary | 117658 | 116685 | 95997 | 99993 |
| Canterbury | 32268 | 30725 | 26365 | 26337 |
| Graphics | 172140 | 164692 | 163915 | 163647 |
| Music | 66692 | 61923 | 58586 | 58388 |
| Misc | 265121 | 249638 | 245434 | 244252 |
| TOTAL: | 653879 | 623663 | 590297 | 592617 |

The Calgary test is a subset of the "classic" Calgary corpus. Exactly 7 out of 8 files in this subset are pure text, with an average size of 36Kb each. Although this is an interesting test, it doesn't represent the typical content you need to compress on a Spectrum. What kind of Spectrum program needs 36Kb of compressed text? Perhaps a text-only adventure, but in that case you would either compress it separately as multiple smaller blocks, or use a custom dictionary-based compression so you could access sentences individually.

The Canterbury test files have more diversity, but they are not typical Spectrum content either. The best representation of the kind of data that needs to be compressed in Spectrum programs is the remaining test cases: Graphics (Spectrum screens, etc), Music (AY, etc) and Misc (tape and disk images). In all three of these test cases, ZX0 produced the smallest output of any compressor listed here.

However, I'm not saying that ZX0 will always compress better than Exomizer3 in practice. My other tests seem to indicate that Exomizer3 works better in a few practical cases and ZX0 in others. The results are so close that it's hard to choose a winner.
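To put numbers on how close the two are, here is a small Python sketch that takes the sizes from the tables in this post and prints, for each test, the compressed size as a percentage of the original and the byte gap between Exomizer3 and ZX0. The function names are just for illustration.

```python
# Sizes in bytes, copied from the tables in this post.
UNPACKED = {"Calgary": 273526, "Canterbury": 81941, "Graphics": 289927,
            "Music": 151657, "Misc": 436944}
PACKED = {
    "Exomizer3": {"Calgary": 95997, "Canterbury": 26365, "Graphics": 163915,
                  "Music": 58586, "Misc": 245434},
    "ZX0":       {"Calgary": 99993, "Canterbury": 26337, "Graphics": 163647,
                  "Music": 58388, "Misc": 244252},
}

def ratio(packer, test):
    """Compressed size as a percentage of the unpacked size."""
    return 100.0 * PACKED[packer][test] / UNPACKED[test]

def gap(test):
    """Bytes saved by ZX0 relative to Exomizer3 (negative: ZX0 is larger)."""
    return PACKED["Exomizer3"][test] - PACKED["ZX0"][test]

for test in UNPACKED:
    print(f"{test:<11} Exomizer3 {ratio('Exomizer3', test):5.1f}%  "
          f"ZX0 {ratio('ZX0', test):5.1f}%  gap {gap(test):+d} bytes")
```

On Graphics, Music and Misc, ZX0 comes out 268, 198 and 1182 bytes smaller respectively, while on Calgary it is 3996 bytes larger, which is where the difference in the totals comes from.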

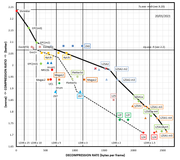

The real advantage of ZX0 is that it achieves about the same compression as Exomizer3 while using a much smaller and faster decompressor.

So give it a try! Have fun!